Some dense reading about preseason ratings

Well, here we are. The season is about to start and thus, it’s time for my annual plea to treat preseason ratings with their proper respect. This is not to overstate their predictive ability, but even in an era of unprecedented player movement, preseason ratings are still clearly better than a random number generator. They’re even better than just using ratings from the end of last season. In fact, the preseason ratings here are as useful as they’ve always been, even with the increased challenges of recent seasons.

To get serious about the performance of preseason ratings, we should look at how they did across every team. There are a couple of accepted measures. First, there’s the root mean squared error (RMSE): take the change in each team’s net rating from the preseason to the end of the season, square it, average those squares across all teams, and take the square root. Lower is better. That’s the blue line in the left plot below.
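
As a minimal sketch of the RMSE calculation just described, here it is in Python. The function name `rmse` and the ratings are made up for illustration; they are not the actual ratings or code behind the plot.

```python
import math


def rmse(preseason, final):
    """Root mean squared error between preseason and end-of-season net ratings.

    Both arguments list net ratings for the same teams in the same order.
    """
    if len(preseason) != len(final) or not preseason:
        raise ValueError("need two non-empty rating lists of equal length")
    # Change in each team's net rating from preseason to end of season
    changes = [end - pre for pre, end in zip(preseason, final)]
    # Square the changes, average them, then take the square root
    return math.sqrt(sum(c * c for c in changes) / len(changes))


# Hypothetical net ratings for four teams (illustrative, not real data)
pre = [25.0, 12.0, 3.0, -8.0]
end = [21.0, 15.0, 3.0, -4.0]
print(f"RMSE: {rmse(pre, end):.2f}")  # lower is better
```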

The other measure is correlation. It’s basically (but not exactly) a measure of how similar the order of the teams was from the preseason to the end of the season. Higher is better. That’s the red line in the middle plot. I tend to prefer that one since we really care about the order. And if the order is close, it’s simply a matter of properly regressing (or “pro”-gressing) the ratings to minimize the error.
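
To illustrate why getting the order right matters most, here is a Python sketch with hypothetical data and hand-rolled helpers (`pearson`, `rmse`, and `regress_toward_fit` are names chosen for this example). It shows that rescaling the preseason ratings along a least-squares line leaves the correlation unchanged but lowers the RMSE. The line is fit on the same season purely for illustration; any real adjustment would have to be estimated from earlier seasons, and this is not a description of how the published ratings are actually adjusted.

```python
import math
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def rmse(xs, ys):
    """Root mean squared difference between two equal-length sequences."""
    return math.sqrt(sum((y - x) ** 2 for x, y in zip(xs, ys)) / len(xs))


def regress_toward_fit(pre, final):
    """Rescale preseason ratings with the least-squares line final ~ a + b * pre.

    Fitting on the same season's results is for illustration only; a real
    adjustment would have to be estimated from earlier seasons.
    """
    mx, my = statistics.fmean(pre), statistics.fmean(final)
    b = sum((x - mx) * (y - my) for x, y in zip(pre, final)) / sum(
        (x - mx) ** 2 for x in pre
    )
    a = my - b * mx
    return [a + b * x for x in pre]


# Hypothetical preseason and final net ratings (not real data)
pre = [30.0, 20.0, 10.0, 0.0, -10.0]
final = [20.0, 16.0, 6.0, 2.0, -6.0]
adjusted = regress_toward_fit(pre, final)

# A linear rescaling with a positive slope leaves the correlation unchanged...
print(round(pearson(pre, final), 4), round(pearson(adjusted, final), 4))
# ...but can shrink the error considerably.
print(round(rmse(pre, final), 3), round(rmse(adjusted, final), 3))
```

Here the least-squares slope is below 1, so the adjustment "regresses" the spread of the preseason ratings; a slope above 1 would "pro-gress" them instead.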

I’ve added a linear trend to the plots. The end points do some work here, but the trend in both measures has improved over the 13 seasons I’ve done preseason ratings. Last year wasn’t the best year by either measure; it was fifth-best by correlation and fourth-best by RMSE. Still, not bad under the circumstances.

And the green plot on the right describes those circumstances. Minutes continuity, after hanging around 50% through the mid-2010s, has steadily dropped as restrictions on player movement have been removed. Last season it dropped below 40% for (I’m assuming) the first time in history.[1]
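
Minutes continuity isn’t defined in the text above. The sketch below assumes one plausible definition for illustration only: for each player on both rosters, take the smaller of his share of team minutes in the two seasons, then sum over those players. That definition, the function name, and the rosters are assumptions, not necessarily what sits behind the green plot.

```python
def minutes_continuity(last_season, this_season):
    """Fraction of team minutes carried over from one season to the next.

    ASSUMED definition (for illustration only): for every player on both
    rosters, take the smaller of his share of team minutes in the two seasons,
    then sum over those players. Each argument maps player -> minutes played.
    """
    total_last = sum(last_season.values())
    total_this = sum(this_season.values())
    continuity = 0.0
    # Only players who appear in both seasons contribute
    for player in last_season.keys() & this_season.keys():
        share_last = last_season[player] / total_last
        share_this = this_season[player] / total_this
        continuity += min(share_last, share_this)
    return continuity


# Hypothetical rosters: one returner (A) plus a transfer (D) who replaces B and C
last = {"A": 400, "B": 300, "C": 300}
this = {"A": 500, "D": 500}
print(f"{minutes_continuity(last, this):.0%}")
```

Taking the minimum of the two shares means a returner whose role grows (player A here) only counts for the minutes he already carried, so a roster rebuilt through the portal scores low even if a few names return.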

One thing that has dropped along with roster continuity is the correlation of team ratings from year to year. Last season’s correlation with the previous season’s ratings was the second-lowest in the last 26 years, and two years ago it was the third-lowest. (I’m not sure what was going on in 2012, but it reigns as the worst year-to-year correlation for now.)

The most important tool in predicting team performance is past team performance, and one thing these graphs suggest is that past performance is less important than it used to be. So predicting team performance prior to the season figures to become more difficult with increased player movement.

Sometimes, when people feel all insecure and want to pump up how good college basketball is, they’ll talk about how anyone can beat anyone. But the reality is there just isn’t a lot of change in the upper or lower tiers of conferences over time.

It’s obviously not complete mayhem these days. The best programs still get first pick at the transfer portal. Still, last season was Kansas’s worst rank ever over the history of my ratings. UCLA went from a two-seed to a losing record. Miami went from the Final Four to a losing record. Arkansas went from the Sweet Sixteen to a losing record.

There are certainly some drawbacks to all of the player movement, but to me, one of the benefits is that it’s not a given that a particular program will be good (or bad) forever. Now more than ever, past success is no guarantee of future success.

When I first started doing preseason ratings, I didn’t even include transfers. Using program history and returning production was good enough. Last offseason I did a fair amount of work to improve the performance of the preseason ratings for our new world. The results were encouraging, but some or even most of this could be luck. The annual error plot indicates there was a lot of variability in past seasons and, spoiler alert, I wasn’t changing the preseason algorithm every season.

But the following plot is promising. It compares the year-to-year correlation of team ratings to the preseason-to-final correlation of team ratings for each season.

You can see these lines generally move together. Historically, when season-to-season correlation has been higher, the preseason ratings have performed better. Last year, though, things were different. This relationship decoupled: Year-to-year correlation was as low as it’s been since I started doing preseason ratings and yet the ratings still did well.

I don’t want to overstate things. As I discussed in last season’s post, there is quite a bit of uncertainty in the preseason ratings. Any prediction system is only going to do so well. And even the end-of-season ratings themselves are far from a perfect measure of a team’s true ability. So don’t worry, there will still be some big misses.

Like you, I’m not comfortable with Villanova at 20th or Kentucky at 43rd. Dayton at 27th seems high. But given that the ratings work well for the vast majority of teams, I don’t want to completely dismiss the oddballs, either. For me, it’s always fun to look back on the previous season’s ratings and see how my outliers did. I’m not going to do that here, because last season there were more outliers than ever, but needless to say some hit and some missed. Enough hit, though, to make me feel comfortable with the preseason algorithm. I will always have South Carolina and McNeese, which we had 19 and 33 spots higher, respectively, than any other system.

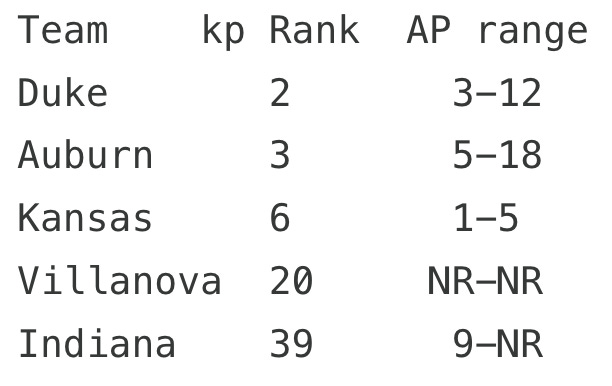

And finally, I’ll make my annual note that there’s too much herding among AP voters. Here are the five teams I have ranked outside the range of the 60 voters:

Administrative note: I updated the ratings over the weekend based on additional player news since the original release. I also found a few dozen players missing from my original rosters, mainly affecting teams in one-bid conferences.

[1] Spoiler alert: It will be even lower this season.